My name is Max Kirby, and this case study documents my experience conducting a small-scale, three-player, unmoderated playtest of “Axom: Conquest,” a tower defense game currently available in demo form on Steam. The primary objective was to identify player pain points and uncover opportunities for improvement, providing valuable feedback for the developer, Thomas Capstick. A comprehensive UX Report is available for further detail.

Figure 1: The Logo for Axom: Conquest

Axom: Conquest is a roguelite tower defense game set on the hostile planet of Axom, where players must build, upgrade, and defend their base against relentless alien swarms. Blending base-building strategy, wave-based combat, and long-term progression, the game challenges players to gather resources, unlock research, and discover powerful synergies to survive increasingly difficult assaults. The demo offers a taste of the experience with the first 25 waves, two unique biomes, and dozens of structures and upgrades, with far more awaiting in the full release.

This case study presents my methodology, key findings, recommendations, and reflections, highlighting the lessons learned and the strategies that can inform future playtests.

Methodology

I discovered Axom: Conquest through a Reddit post on r/PlayMyGame where developer Thomas Capstick shared that he had been working on the game in his spare time while holding a supermarket job. The honesty and passion behind the post immediately caught my attention, and I was intrigued by the premise: a roguelite tower defense game set on the hostile planet of Axom. In June 2025, I reached out to Thomas to discuss running a small playtest.

Figure 2: Thomas Capstick’s Reddit Post on Wanting Some Feedback on His Game

From our conversations, Thomas explained that the game had only been tested by friends and family so far, and he was eager to see how fresh players would respond. His primary goals were to understand how intuitive the experience is for first-time players and to identify pain points around difficulty balance: whether the game felt too easy, too hard, or frustrating in unexpected ways. He also expressed interest in any additional insights that could highlight opportunities to improve the overall player experience.

To prepare, I played through the demo myself to gain familiarity with the mechanics, structure, and pacing. This helped me anticipate possible onboarding issues and informed the survey design. Together, we agreed on a 30-minute minimum session length, which matched the typical length of a run, and decided to recruit a mix of casual, midcore, and hardcore strategy/tower defense players to capture a diverse range of perspectives.

The playtest was conducted with three unmoderated participants, combining recorded gameplay, surveys, and observational analysis. This approach not only captured direct feedback, but also revealed moments where players hesitated, misinterpreted instructions, or struggled with mechanics such as building defenses or managing resources. These findings became the basis for identifying key pain points and suggesting actionable improvements.

Designing My Study Plan

I created a detailed study plan to structure my playtest; see:

Axom: Conquest – Study Plan

My Plan Included:

● Player Introduction: A structured introduction was developed to ensure participants were adequately prepared for the playtest. This section provided step-by-step instructions for downloading and launching the game and explained that the session would be unmoderated, meaning players would engage with the prototype independently. It also established expectations for think-aloud protocols, where participants verbalise their thoughts during gameplay. Furthermore, the introduction detailed technical requirements (e.g., working microphone, quiet environment) and outlined informed consent, clarifying that both gameplay and voice data would be recorded for analysis while maintaining participant anonymity.

● Moderation Table: The study plan incorporated a “During the Session” table designed to guide observation and data collection. This framework identified research objectives, behavioural indicators to monitor, and follow-up questions to probe specific experiences. For example, signs of disengagement (e.g., quitting after losses or skipping upgrades) were linked to questions such as “Would you continue playing this in your own time?” Similarly, observations of pacing issues (e.g., rushing through levels or extended idle moments) were connected to questions like “Did the game feel too fast, too slow, or just right?” This systematic approach ensured consistency in capturing both observed behaviours and self-reported perceptions.

Figure 3: Example of my Moderation Table, Depicting What I Want to Learn, What to Watch Out For, and What I Could Ask in the Survey Based on This.

● Survey: Following the playtest, participants completed a 17-item post-session survey designed to capture both quantitative ratings and qualitative reflections. The survey evaluated multiple dimensions of the player experience, including early engagement, pacing, clarity of rewards and progression systems, tower diversity and strategic depth, control intuitiveness, UI usability, enemy recognition, and frustration or fairness perceptions. By combining Likert scale measures with open-ended questions, the survey generated data that was both comparable across participants and rich in contextual detail.

● Focus Areas: The study focused on uncovering pain points in the first 30 minutes of Axom: Conquest, particularly around onboarding clarity, core loop engagement, reward feedback, strategic choice-making, pacing and progression, controls and UI, and enemy design clarity. These areas were prioritised because they are most likely to cause frustration or disengagement, and the study plan combined observations with survey questions to capture both where players struggled and how it affected their experience.

This study design offered several methodological advantages. By combining naturalistic observation of unmoderated play with structured reflection through a survey, the approach balanced ecological validity with research rigor. The integration of both behavioural indicators and self-reported measures provided a comprehensive view of player experience, ensuring that findings could meaningfully inform both iterative design improvements and longer-term development priorities for Axom: Conquest.

Conducting My Playtest:

To conduct the playtest for Axom: Conquest, I collaborated with PlaytestCloud, using their platform to recruit three participants. Following the developer’s request, I targeted players with prior interest in tower defense and strategy games, while ensuring a balanced mix of casual and more dedicated (midcore/hardcore) players. As the developer did not specify an age or gender preference, I opted for a mixed-gender sample (two male, one female) to maintain inclusivity.

The developer emphasised two primary research goals:

- Identifying pain points — where players might become frustrated, confused, or discouraged.

- Generating improvement ideas — gathering player-driven suggestions to enhance the overall experience.

I submitted the playtest request shortly after the developer’s 0.8.0.2 update was released, to ensure participants were engaging with the most recent version of the demo available on Steam. Each participant was asked to play for a minimum of 30 minutes (roughly the length of a full run), with the flexibility to continue if they were especially engaged.

This session was designed as an unmoderated study. Players completed the test independently in their own time, while their gameplay and audio commentary were recorded. This structure allowed participants to behave more naturally, closer to how they would if they discovered the game themselves. After the session, players completed a 17-question post-playtest survey designed around the developer’s goals, focusing on engagement, pacing, clarity of feedback/rewards, tower balance, enemy differentiation, controls, and moments of frustration.

Before beginning, participants were reminded of the following:

- The session was about testing the game, not them as players.

- Their thoughts, reactions, and feelings were most valuable when vocalised in real time.

- Their participation would remain anonymous, and their feedback would only be used for research and reporting purposes.

The combination of recorded gameplay, think-aloud commentary, and structured survey responses provided both qualitative insight (moments of hesitation, frustration, or delight observed directly in play) and quantitative trends (engagement ratings, clarity of feedback, control intuitiveness, etc.).

Together, these methods ensured the developer would receive a rounded view of where Axom: Conquest succeeds, and where adjustments might improve accessibility, pacing, and long-term player satisfaction.

My Data Analysis Process:

● Recording Review & Note Taking: As this was an unmoderated study, I reviewed the gameplay recordings of all three participants in full. While watching, I took structured notes on player behaviour and commentary, focusing on moments of confusion, visible enjoyment, frustration, and curiosity. For clarity, each participant’s notes were stored separately, capturing both direct quotes and behavioural observations. This allowed me to track individual experiences before later synthesising shared themes across the group.

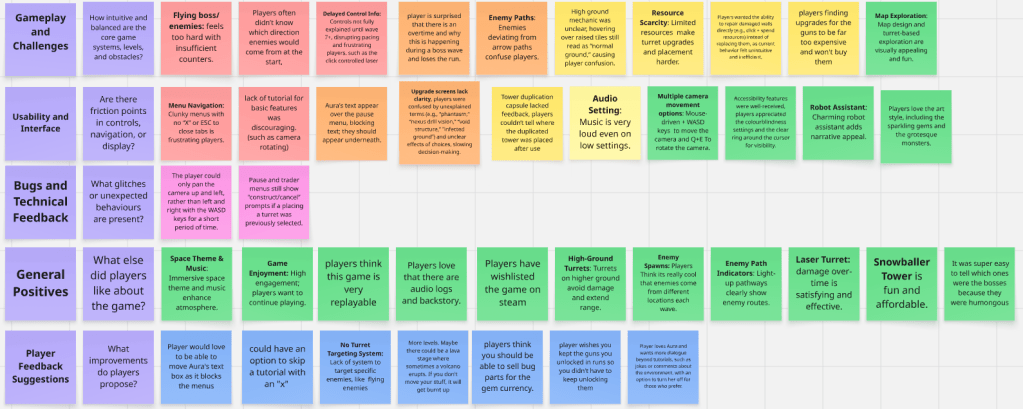

● Organising Data in Miro: Once the recordings were reviewed, I transferred my notes into a Miro board for analysis. Each observation was converted into a sticky note and categorised under key themes such as Gameplay and Challenges, Usability and Interface, Bugs and Technical Feedback, etc. I then colour-coded each note by severity level (Positive, Low, Medium, Serious, Critical). This structure helped me quickly identify recurring issues across players while keeping the full dataset accessible for reference. (See Attached Miro Board)

Figure 4: Miro board used to categorise playtest feedback by theme and severity.
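The same theme-and-severity sorting could also be sketched in code for larger studies. The snippet below is a minimal illustration only, not part of the actual workflow (which was done by hand in Miro). The `Observation` class, the function names, and the participant labels (P1 to P3) are hypothetical, and the attribution of notes to participants is a placeholder rather than real study data.

```python
from collections import defaultdict
from dataclasses import dataclass

# Severity levels from the report's key, ordered most to least urgent.
SEVERITY_ORDER = ["Critical", "Serious", "Medium", "Low", "Positive"]


@dataclass
class Observation:
    participant: str  # anonymised label such as "P1" (hypothetical)
    theme: str
    severity: str
    note: str


def group_by_theme(observations):
    """Group notes by theme, sorting each group from most to least severe."""
    grouped = defaultdict(list)
    for obs in observations:
        if obs.severity not in SEVERITY_ORDER:
            raise ValueError(f"Unknown severity: {obs.severity}")
        grouped[obs.theme].append(obs)
    for notes in grouped.values():
        notes.sort(key=lambda o: SEVERITY_ORDER.index(o.severity))
    return dict(grouped)


def recurring_issues(observations, min_participants=2):
    """Return (theme, note) pairs raised by at least `min_participants` players."""
    seen = defaultdict(set)
    for obs in observations:
        seen[(obs.theme, obs.note)].add(obs.participant)
    return [key for key, who in seen.items() if len(who) >= min_participants]


# Placeholder entries: the notes echo findings from this study, but which
# participant raised which note is invented for illustration.
sample = [
    Observation("P1", "Usability and Interface", "Low",
                "Music too loud at low volume settings"),
    Observation("P1", "Usability and Interface", "Serious",
                "Aura's dialogue box overlapped menus"),
    Observation("P2", "Usability and Interface", "Serious",
                "Aura's dialogue box overlapped menus"),
    Observation("P3", "Gameplay and Challenges", "Critical",
                "Enemy path indicators not understood"),
]

board = group_by_theme(sample)
for theme, notes in board.items():
    print(theme)
    for obs in notes:
        print(f"  [{obs.severity}] {obs.participant}: {obs.note}")

print(recurring_issues(sample))
```

Keeping the participant label on every note is what makes it possible to spot issues that recur across players, which is the main thing the colour-coded board was used for.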

● Survey Analysis: In addition to the recordings, I analysed the post-playtest survey responses. Since the survey was pre-prepared, it enabled me to capture both quantitative data (1–5 scale ratings) and qualitative feedback (open-ended responses). This provided a useful second layer of data: survey responses often validated behavioural observations from the recordings, or highlighted perceptions that were less clearly verbalised during gameplay.

Because the dataset was relatively small, I chose not to create graphs from the ratings, instead focusing on comparing trends across participants manually. The free-text answers were integrated into the Miro board alongside my observational notes, ensuring that each theme was supported by both what players did and what players reported.

● Revisiting Recordings & Visual Evidence: In addition to reviewing the recordings for accuracy, I also revisited them to capture screenshots of key moments for the developer. These visual examples were useful in highlighting issues such as points of confusion or UI clarity concerns, giving the feedback greater context.

Normally, I would timestamp my notes during the initial review to make this process more efficient. However, on this occasion I did not, which meant revisiting the footage took longer than expected. This highlighted the importance of maintaining timestamps in future studies, as they not only speed up the screenshot process but also make cross-referencing survey responses and behaviours more precise.
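As a rough sketch of what timestamped notes could look like in practice, the snippet below stores each note with a recording timestamp and looks up notes within a time window. It is an illustration under assumptions, not the tooling used in this study: the `TimedNote` class, the helper names, and every timestamp shown are hypothetical placeholders.

```python
from dataclasses import dataclass


def to_seconds(stamp: str) -> int:
    """Convert "mm:ss" or "hh:mm:ss" into a number of seconds."""
    seconds = 0
    for part in stamp.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


@dataclass
class TimedNote:
    participant: str  # anonymised label such as "P1" (hypothetical)
    stamp: str        # position in the recording, e.g. "12:45"
    note: str


def notes_between(notes, start, end):
    """Return notes whose timestamp falls within [start, end], in time order."""
    lo, hi = to_seconds(start), to_seconds(end)
    hits = [n for n in notes if lo <= to_seconds(n.stamp) <= hi]
    return sorted(hits, key=lambda n: to_seconds(n.stamp))


# Placeholder log: the timestamps are invented for illustration.
log = [
    TimedNote("P1", "12:45", "Aura's text box covers the Trader menu"),
    TimedNote("P1", "04:30", "Unsure what the glowing lines mean"),
    TimedNote("P1", "1:02:03", "Asks whether walls can be repaired"),
]

for n in notes_between(log, "00:00", "15:00"):
    print(f"{n.stamp}  {n.participant}: {n.note}")
```

With a log like this, finding the right moment for a screenshot becomes a lookup rather than a scrub through the full recording.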

Compiling My Report:

I compiled all of my findings into a comprehensive UX report titled “Axom: Conquest – UX Report,” structured around the key focus areas of the study. The report featured a severity key to help prioritise issues, along with player paraphrases and screenshots from the unmoderated sessions to support and illustrate my observations. I shared the report with the developer via Reddit, highlighting key points and offering to clarify anything or discuss the findings further via call if needed. They appreciated the feedback and indicated they would take their time reviewing it and addressing the most critical issues.

Figure 5: Severity key used to categorise playtest findings for “Axom: Conquest,” defining Positive, Critical, Serious, Medium, and Low issues

Key Findings from My Playtest:

Gameplay and Challenges:

Positives (Slide 8):

- Turrets on higher ground avoided damage and gained extended range, which players found intuitive.

- Map design and turret-based exploration were visually appealing and fun.

- Enemy spawn variety (different locations per wave) added excitement.

- Light-up pathways for enemy routes were praised as visually clear.

Critical:

● Flying enemies and bosses felt disproportionately difficult because the game offered no clear anti-air defenses and provided no warning before their arrival. Players often lost suddenly when these enemies bypassed defenses, creating frustration and a sense of helplessness. (Slide 9)

● Enemy path indicators were misunderstood due to lack of explanation. While glowing lines marked enemy routes, players didn’t realise their purpose. This led to misplaced defenses and quick losses, creating early frustration and repeated failure. (Slide 10)

Figure 6: Enemy Path Indicators are There, But Not Mentioned to the Player

● Key mechanics, such as the click-controlled laser and camera rotation, were introduced too late. By the time players discovered them, pacing had already been disrupted, and the late explanations left them confused and disengaged. (Slide 11)

Figure 7: The Player Doesn’t Get Told About the Click Laser Until Wave 4

Serious:

● Boss waves and overtime mechanics were unclear and poorly signposted. The skull icon meant to indicate their arrival was too vague, and without prior hints, players were caught off guard by sudden spikes in difficulty, often leading to abrupt defeats. (Slide 12)

Figure 8: Player Losing Run During a Boss Wave Overtime

● Enemies sometimes deviated from arrow-marked paths without explanation, undermining the trust players had in the route indicators. This caused wasted resources, misplaced defenses, and sudden strategic failures, which many players described as unfair. (Slide 14)

Figures 9 and 10: Figure 9 shows the blue arrows marking the enemies’ pathway, whereas Figure 10 shows where the enemies (marked by red arrows) deviate from the path, ultimately targeting the hole in the defenses.

Medium:

● High-ground tiles were mislabeled as “normal ground,” which confused players after the tutorial mentioned high-ground advantages. Because the labels didn’t match expectations, some players ignored these tiles or placed towers incorrectly, leading to suboptimal strategies. (Slide 15)

Figure 11: Higher Ground States That it is Just “Normal-Ground”

● Tutorial failed to explain that map exploration yields resources, and refinery introduction was hidden.

● Players couldn’t repair walls directly; lack of guidance forced inefficient wall-doubling.

● Gun upgrades felt too expensive relative to benefits.

Usability and Interface

Positives (Slide 20):

● Flexible camera controls (mouse, WASD, Q+E).

● Accessibility features (colourblind settings, cursor ring) were well received.

● Aura, the robot assistant, added narrative charm.

● Vibrant art style with sparkling gems and grotesque monsters was praised.

Critical:

● Menu navigation was clunky and frustrating. The “X” button to close menus was hidden behind icons, and the right-click cancel mechanic was never explained. This forced players into trial-and-error, making basic navigation cumbersome and pulling focus away from the core gameplay. (Slide 21)

Figure 12: “X” Button to Close the Menu is Hidden Behind the Icon with an Arrow Pointing to It

● Basic features were never taught. Essential controls like camera rotation were assumed to be known, but for new players, the lack of tutorials left them feeling lost and excluded. This discouraged early engagement and created unnecessary barriers. (Slide 22)

Serious:

● Aura’s dialogue box frequently overlapped menus, obscuring critical text and resource information. Players often couldn’t read key details, which led to confusion and broke immersion. (Slide 23)

Figure 13: Aura’s Text Pop-Up is Blocking Key Text on the “Trader” Menu

● Upgrade screens relied on vague and confusing terminology. Terms like “phantasm” or “void structure” lacked explanation, forcing players to guess at the impact of their choices. This slowed down decision-making, increased frustration, and diminished satisfaction with progression. (Slide 24)

Medium:

- Duplication capsule provided no feedback about where duplicated towers appeared. (Slide 25)

Low:

- Music remained overly loud even at low volume settings. (Slide 26)

Bugs and Technical Feedback:

Low:

● Temporary camera panning restriction occurred after rapid WASD input, momentarily locking navigation. (Slide 28)

● Persistent “construct/cancel” prompts stayed on screen after switching menus, confusing players and cluttering the UI. (Slide 29)

Figure 14: Construct/Cancel UI Prompt in the Trader Menu

General Positives (Slide 31):

- Immersive space theme and music enhanced atmosphere.

- Gameplay was highly enjoyable and addictive.

- Replayability was strong; players wanted multiple playthroughs.

- Audio logs and backstory added narrative depth.

- Players wishlisted the game on Steam.

Player Suggestions (Slides 33 and 34):

- Make Aura’s text box movable to avoid blocking menus.

- Add turret targeting system (e.g., prioritise flying enemies).

- Include more levels (e.g., lava stage with volcano hazards).

- Allow bug parts to be sold for gem currency.

- Add permanent weapon unlocks across runs.

- Expand Aura’s dialogue (jokes, environmental comments) with option to disable.

- Add tutorial skip button.

My Reflections and Lessons Learned:

One of the biggest lessons I took away from this playtest was the need to add timestamps when note-taking. While reviewing the recordings, I often had to scrub back through long playtest sessions to find the exact moment where a reaction or issue occurred. This slowed down my analysis and made it harder to cross-check details later. By logging timestamps alongside my notes, I’ll be able to reference moments quickly and precisely, making the process far more efficient in future playtests.

Aside from this, I felt the overall structure of the playtest and the reporting process went very smoothly. The framework I used for categorising positives, critical, serious, medium, and low issues proved effective in highlighting what mattered most, and players’ feedback was both rich and actionable. Overall, I’m confident in the process I used here and only see small refinements, like timestamping, to make the next iteration even stronger.

Developer Testimonial:

Thomas Capstick, the developer of Axom: Conquest, shared the following testimonial after working with me on the player experience:

Kirbylife was a pleasure to work with while playtesting my game Axom: Conquest. He explained everything clearly and was very polite without pushing for me to do things a certain way. He was very patient when I needed more time to get my game update finished.

He produced a very professional case study about my game detailing lots of things I would have never thought of otherwise. His analysis was very in depth and didn’t miss even minor details.

I’m happy to say I think my game will be greatly improved with his help and I’m very glad to have worked with him.

He was very timely with communication as well.

Conclusion:

This playtest of Axom: Conquest successfully achieved its goals: identifying key pain points, highlighting what resonated most with players, and providing actionable insights for the developer. While players praised the game’s art style, atmosphere, and core gameplay loop, they also encountered significant usability and clarity issues, particularly around tutorials, navigation, and enemy design communication. These findings emphasise the importance of clear onboarding and consistent feedback to support long-term engagement in a complex strategy game.

From my perspective as a researcher, the process reinforced the value of a structured framework for capturing feedback and the importance of refining my own workflow, most notably by adding timestamps to improve efficiency in future analyses. Overall, the study not only produced rich, actionable insights for the developer but also strengthened my own practice as a UX researcher.

I’d like to thank Thomas Capstick for trusting me to run this playtest and for being so open to feedback throughout the process. His passion and dedication to Axom: Conquest were clear at every stage, and I’m confident the improvements inspired by this study will help the game reach its full potential.
